Orthogonal Persistence - The Future of Data Management

Understanding orthogonal persistence and how ICP canisters revolutionize data management by making persistence transparent to application logic

What is Orthogonal Persistence?

Orthogonal Persistence is a revolutionary concept in computer science where data persistence is completely independent of the programming language, data structures, and application logic. In an orthogonally persistent system, objects and data structures persist automatically and transparently, without requiring explicit save/load operations.

The term "orthogonal" refers to the separation of concerns - persistence is orthogonal (independent) to the core application logic, much like how memory management is orthogonal to algorithmic complexity.

In ICP's implementation, orthogonal persistence creates the illusion that programs run forever without crashing or losing state. The runtime handles persistence transparently, eliminating the need for explicit file operations or database calls.

Official ICP Definition

According to the Internet Computer documentation, orthogonal persistence is the ability for a program to automatically preserve its state across transactions and canister upgrades without requiring manual intervention. This means that data persists seamlessly, without the need for a database, stable memory APIs, or specialized stable data structures.

Although Motoko's persistence model is complex under the hood, it's designed to be both safe and efficient. By simply using the persistent (actors) or stable (data structures) keyword, developers can mark pieces of their program as persistent. This abstraction significantly reduces the risk of data loss or corruption during upgrades.

In contrast, other canister development languages like Rust require explicit handling of persistence. Developers must manually manage stable memory and use specialized data structures to ensure data survives upgrades. These languages lack orthogonal persistence, and may rearrange memory unpredictably during recompilation or runtime, making safe persistence more error-prone and labor-intensive.

The Traditional Persistence Problem

Traditional software development treats persistence as a major architectural concern that complicates application design. Developers must manually manage data serialization, handle database transactions, and implement error recovery - all while keeping persistence logic separate from business logic.

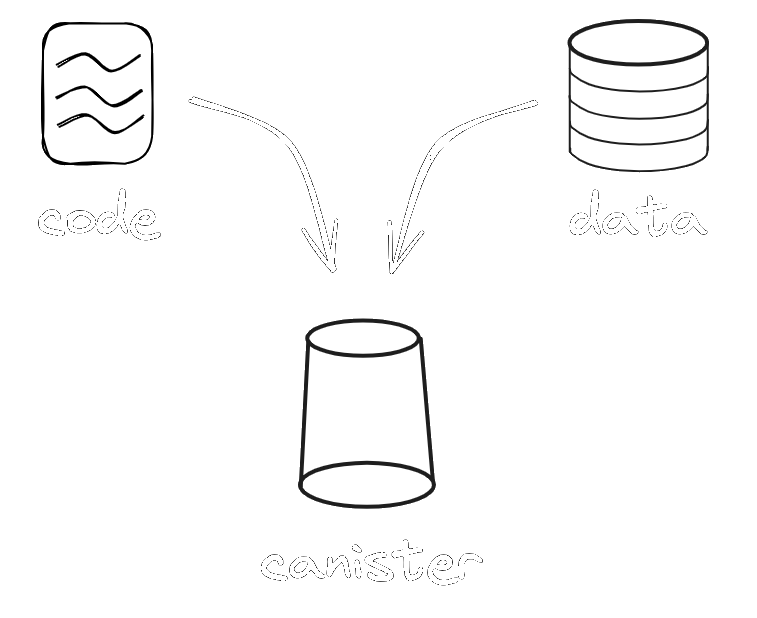

Orthogonal Persistence in ICP Canisters

The Internet Computer Protocol brings orthogonal persistence to blockchain applications through canisters - sophisticated smart contracts with automatic data persistence.

Enhanced Orthogonal Persistence (EOP)

ICP features Enhanced Orthogonal Persistence (EOP) as the default persistence model in Motoko:

Key Features of EOP:

- Stable heap: Main memory persists across canister upgrades without copying

- 64-bit memory support: Scales beyond 4GB, approaching stable memory capacity

- Fast upgrades: No serialization overhead - upgrades complete in milliseconds

- Type-driven safety: Automatic compatibility checking prevents data corruption

- Incremental garbage collection: Efficient memory management with partitioned heaps

Comparison with Classical Persistence:

| Aspect | Classical Persistence | Enhanced Orthogonal Persistence |
|---|---|---|
| Upgrade Speed | Slow (serialize/deserialize) | Fast (memory retention) |
| Scalability | Limited to 2 GiB | Scales to 64-bit memory |
| Safety | Manual stable memory management | Automatic type checking |
| Complexity | High (explicit stable structures) | Low (transparent persistence) |
| Default Status | Legacy (deprecated) | Default since Motoko v0.15.0 |

For a detailed comparison, see the "Enhanced vs Classical Orthogonal Persistence" section below.

Enhanced vs Classical Orthogonal Persistence

Motoko features two implementations for orthogonal persistence:

Enhanced Orthogonal Persistence (EOP)

Enhanced orthogonal persistence implements the vision of efficient and scalable orthogonal persistence in Motoko that combines:

- Stable heap: Persisting the program's main memory across canister upgrades.

- 64-bit heap: Extending the main memory to 64-bit for large-scale persistence.

As a result, the use of secondary storage (explicit stable memory, dedicated stable data structures, DB-like storage abstractions) will no longer be necessary: Motoko developers can directly work on their normal object-oriented program structures that are automatically persisted and retained across program version changes.

Key Advantages:

- Performance: New program versions directly resume from the existing main memory and have access to the memory-compatible data.

- Scalability: The upgrade mechanism scales with larger heaps and, in contrast to serialization, does not hit IC instruction limits.

- Simplicity: Developers do not need to deal with explicit stable memory.

- Safety: Built-in compatibility checks prevent data corruption during upgrades.

Technical Implementation:

- Extension of the IC to retain main memory on upgrades

- Supporting 64-bit main memory on the IC

- A long-term memory layout that is invariant to new compiled program versions

- A fast memory compatibility check performed on each canister upgrade

- Incremental garbage collection using a partitioned heap

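Compatibility Changes: The runtime system accepts the following changes to stable declarations on upgrade:

- Adding actor fields

- Changing mutability of actor fields (let to var and vice-versa)

- Adding variant fields

- Changing Nat to Int

- Supporting shared function parameter contravariance and return type covariance

Removing actor fields or object fields was accepted by Motoko versions prior to v0.14.6. Because such changes discard data, they now require an explicit migration.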

The runtime system checks migration compatibility on upgrade, and if not fulfilled, rolls back the upgrade.
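As a rough illustration of the compatible changes listed above, the sketch below shows a second version of a hypothetical Counter actor. The actor name, its fields, and the first version described in the comments are assumptions made up for this example.

```motoko
// Version 2 of a hypothetical canister.
// Assume version 1 declared:
//   var count : Nat = 0;
//   var status : { #active } = #active;
persistent actor Counter {
  // Nat widened to Int: accepted, since every Nat is also an Int.
  var count : Int = 0;

  // The variant gained a tag: accepted, since old values remain valid.
  var status : { #active; #paused } = #active;

  // New actor field: its initializer runs on the upgrade that introduces it.
  var lastReset : Int = 0;

  public func decrement() : async Int {
    count -= 1;
    count
  };

  public func pause() : async () {
    status := #paused;
  };
}
```

Going the other way (Int back to Nat, or dropping the #paused tag) would not be an implicit migration, because existing values might not fit the new type.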

Classical Orthogonal Persistence

Classical orthogonal persistence is the legacy implementation that has been superseded by enhanced orthogonal persistence. On an upgrade, the runtime system first serializes the persistent data to stable memory and then deserializes it back again to main memory.

Key Disadvantages:

- At maximum, 2 GiB of heap data can be persisted across upgrades

- Shared immutable heap objects can be duplicated, leading to potential state explosion

- Deeply nested structures can lead to call stack overflow

- Serialization and deserialization are expensive and can hit ICP's instruction limits

- No built-in stable compatibility check in the runtime system

Migration from Classical to Enhanced: When migrating from classical to enhanced persistence, the old data is deserialized one last time from stable memory and then placed in the new persistent heap layout. Once operating on the persistent heap, the system should prevent downgrade attempts.

For projects requiring transition, graph-copy-based stabilization can be performed in three steps:

Initiate explicit stabilization before upgrade:

dfx canister call CANISTER_ID __motoko_stabilize_before_upgrade "()"

Run the upgrade:

dfx deploy CANISTER_ID

Complete explicit destabilization after upgrade:

dfx canister call CANISTER_ID __motoko_destabilize_after_upgrade "()"

Comparison Summary

| Aspect | Enhanced Orthogonal Persistence | Classical Orthogonal Persistence |
|---|---|---|
| Default Status | Default since Motoko v0.15.0 | Deprecated, legacy mode |
| Memory Limit | 64-bit memory, scales beyond 4 GiB | Limited to 2 GiB max |
| Upgrade Speed | Fast (milliseconds) | Slow (serialize/deserialize) |
| Scalability | Scales independently of heap size | Limited by serialization costs |
| Safety | Runtime compatibility checks | No built-in checks, relies on dfx warnings |
| Memory Handling | Retains Wasm main memory | Serializes to stable memory |
| Data Integrity | Prevents state explosion | Can duplicate shared data |
| Developer Experience | No explicit stable memory needed | Requires manual stable memory management |

Since version 0.15.0, the moc compiler enables enhanced orthogonal persistence by default. Classical orthogonal persistence can only be re-enabled with the compiler flag --legacy-persistence. Although it is possible to upgrade from classical to enhanced, downgrades are not supported.

As a safeguard, upgrades from classical to enhanced will fail unless the new code is compiled with flag --enhanced-orthogonal-persistence explicitly set.

Implementation Details

Memory Management

Beneath the language-level model, the IC runtime tracks canister memory in 4096-byte pages and uses page maps to record changes:

- Page deltas: Track modified memory pages during execution

- Checkpoints: Periodic full memory snapshots for efficiency

- Incremental GC: Garbage collection that works with persistent heaps

Actor Model Integration

Actors provide natural persistence boundaries:

- State snapshots occur between message processing

- Memory pages track changes automatically

- No explicit commit operations needed

- Concurrent execution with isolated memory spaces
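To make the "no explicit commit" point concrete, here is a minimal sketch with a hypothetical Ledger actor (the names and starting balance are assumptions). State changes made while handling a message are kept when the message completes and discarded if it traps.

```motoko
persistent actor Ledger {
  var balance : Nat = 100;
  var attempts : Nat = 0;

  public func withdraw(amount : Nat) : async Nat {
    attempts += 1;            // tentative change
    assert amount <= balance; // a failed assertion traps
    balance -= amount;
    balance
  };

  public query func getAttempts() : async Nat {
    attempts
  };
}
```

If withdraw is called with an amount larger than the balance, the assertion traps and the increment of attempts is rolled back along with everything else the message did, so no cleanup code is needed.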

Upgrade Safety

EOP includes rigorous safety mechanisms:

- Type compatibility checks: Ensures data structure changes are safe

- Implicit migrations: Handles simple type changes automatically

- Custom migration support: Complex changes can be programmed

- Memory layout invariance: The long-term heap layout stays the same across compiled program versions

Motoko Implementation

Stable Variables

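    // Enhanced orthogonal persistence: automatic and transparent.
    // Uses Map from the mo:core library, which is a stable type.
    // (HashMap.HashMap from mo:base is a class, so it cannot be declared stable.)
    import Map "mo:core/Map";
    import Nat "mo:core/Nat";
    import Text "mo:core/Text";

    persistent actor UserManager {
      type UserId = Text;
      type User = { name : Text; email : Text }; // placeholder fields

      // Implicitly stable in a persistent actor: retained across upgrades
      let users = Map.empty<UserId, User>();
      var nextId : Nat = 0;

      // Explicitly transient: reset on every upgrade (session id -> user id)
      transient let activeSessions = Map.empty<Text, UserId>();

      public func createUser(userData : User) : async UserId {
        let userId = "user-" # Nat.toText(nextId);
        nextId += 1;
        Map.add(users, Text.compare, userId, userData); // kept once the message completes
        userId
      };

      public query func getUser(userId : UserId) : async ?User {
        Map.get(users, Text.compare, userId) // always reads the current state
      };
    };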

Stable Keyword Usage

    // In a plain (non-persistent) actor, mark persistent declarations with 'stable'
    stable var userCount : Nat = 0;
    stable let userProfiles = Map.empty<Text, Profile>(); // Map from mo:core is a stable type

    // Without a modifier, a variable in a plain actor is transient and is reset on upgrade
    var sessionCache = Map.empty<Text, Session>();

Declaring Stable Variables

Within an actor, you can configure which part of the program is considered to be persistent (retained across upgrades) and which part is ephemeral (reset on upgrades).

More precisely, each let and var variable declaration in an actor can specify whether the variable is stable or transient. If you don't provide a modifier, the variable is assumed to be transient by default.

- stable means that all values directly or indirectly reachable from that stable variable are considered persistent and are automatically retained across upgrades. This is the primary choice for most of the program's state.

- transient means that the variable is re-initialized on upgrade, so the values referenced by the transient variable are discarded unless they are transitively reachable from other variables that are stable. transient is only used for temporary state or references to higher-order types, such as local function references.

You can only use the stable, transient (or legacy flexible) modifier on let and var declarations that are actor fields. You cannot use these modifiers anywhere else in your program.

Starting with Motoko v0.13.5, if you prefix the actor keyword with the keyword persistent, then all let and var declarations of the actor or actor class are implicitly declared stable. Only transient variables will need an explicit transient declaration.

Using a persistent actor can help avoid unintended data loss. It is the recommended declaration syntax for actors and actor classes. The non-persistent declaration is provided for backwards compatibility.

    persistent actor Counter {
      var value = 0; // implicitly stable!

      public func inc() : async Nat {
        value += 1;
        return value;
      };
    }

Stable Types

The Motoko compiler must ensure that stable variables are compatible with the upgraded program. To achieve this, every stable variable must have a stable type. A type is stable if removing all var modifiers from it results in a shared type.

The only difference between stable types and shared types is the former's support for mutation. Like shared types, stable types are restricted to first-order data, excluding local functions and structures built from local functions (such as class instances). Excluding local functions is required because the meaning of a function value, consisting of both data and code, cannot easily be preserved across an upgrade while the value of plain data, mutable or not, can be.

In general, classes are not stable because they can contain local functions. However, a plain record of stable data is a special case of object types that are stable. Moreover, references to actors and shared functions are also stable, allowing you to preserve their values across upgrades.
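A short sketch may help separate stable from non-stable types. The type and field names below are hypothetical and chosen only for illustration.

```motoko
persistent actor {
  // Stable: a plain record of first-order data. Removing the var modifier
  // from balance leaves a shared type, so the record type is stable.
  type Account = { owner : Principal; var balance : Nat; tags : [Text] };
  var accounts : [Account] = [];

  // Not stable: the record contains a local function.
  type Handler = { name : Text; run : Nat -> Nat };

  // Declaring this field without a modifier would be rejected, because
  // fields of a persistent actor are implicitly stable:
  //   var handlers : [Handler] = [];

  // Marking it transient is accepted; the value is rebuilt on every upgrade.
  transient var handlers : [Handler] = [
    { name = "double"; run = func(n : Nat) : Nat { n * 2 } }
  ];

  public query func handlerCount() : async Nat {
    handlers.size()
  };
}
```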

Converting Non-Stable Types into Stable Types

For variables that do not have a stable type, there are two options for making them stable:

Use a stable module for the type, such as:

- StableBuffer

- StableHashMap

- StableRBTree

Unlike stable data structures in the Rust CDK, these modules do not use stable memory but instead rely on orthogonal persistence. The adjective "stable" only denotes a stable type in Motoko.

Extract the state in a stable type and wrap it in the non-stable type.

For example, the stable type TemperatureSeries covers the persistent data, while the non-stable type Weather wraps this with additional methods (local function types).

    persistent actor {
      type TemperatureSeries = [Float];

      class Weather(temperatures : TemperatureSeries) {
        public func averageTemperature() : Float {
          var sum = 0.0;
          var count = 0.0;
          for (value in temperatures.values()) {
            sum += value;
            count += 1;
          };
          return sum / count;
        };
      };

      var temperatures : TemperatureSeries = [30.0, 31.5, 29.2];
      transient var weather = Weather(temperatures);
    };

Discouraged: Pre- and post-upgrade hooks allow copying non-stable types to stable types during upgrades. This approach is error-prone and does not scale for large data. Per best practices, using these methods should be avoided if possible. Conceptually, it also does not align well with the idea of orthogonal persistence.

Upgrade Safety

When upgrading a canister, it is important to verify that the upgrade can proceed without:

- Introducing an incompatible change in stable declarations.

- Breaking clients due to a Candid interface change.

With enhanced orthogonal persistence, Motoko rejects incompatible changes of stable declarations during an upgrade attempt. Moreover, dfx checks the two conditions before attempting the upgrade and warns users as necessary.

A Motoko canister upgrade is safe provided:

- The canister's Candid interface evolves to a Candid subtype. You can check valid Candid subtyping between two services described in .did files using the didc tool with argument check file1.did file2.did.

- The canister's Motoko stable signature evolves to a stable-compatible one.

With classical orthogonal persistence, the upgrade can still fail due to resource constraints. This is problematic as the canister can then not be upgraded. It is therefore strongly advised to test the scalability of upgrades extensively. This does not apply to enhanced orthogonal persistence.

Upgrading a Canister

If you change the code of a Motoko canister that has already been deployed and want to upgrade it, the command dfx deploy checks that the interface is compatible and, if it is not, displays a warning:

"You are making a BREAKING change. Other canisters or frontend clients relying on your canister may stop working."

Motoko canisters using enhanced orthogonal persistence implement an extra safeguard in the runtime system to ensure that the stable data is compatible to exclude any data corruption or misinterpretation. Moreover, dfx also warns about incompatibility and dropping stable variables.

Data Migration

Often, data representation changes with a new program version. For orthogonal persistence, it is important that the language supports flexible data migration to the new version.

Motoko supports two kinds of data migrations: Implicit migration and explicit migration.

Implicit Migration

Migration is automatically supported when the new program version is stable-compatible with the old version. The runtime system of Motoko then automatically handles the migration on upgrade.

More precisely, the following changes can be implicitly migrated:

- Adding actor fields.

- Changing the mutability of an actor field.

- Adding variant fields.

- Changing Nat to Int.

- Any change that is allowed by Motoko stable subtyping rules. These are similar to Motoko subtyping, but stricter, and do not allow dropping of record fields or promotion to the type Any, either of which can result in data loss.

Motoko versions prior to v0.14.6 allowed actor fields to be dropped or promoted to Any, but such changes now require explicit migrations. The rules have been strengthened to prevent accidental loss of data.

Explicit Migration

More complex migration patterns, which involve non-trivial data transformations, are possible. However, they require additional coding effort and careful handling.

One common approach is to replace a set of stable variables with new ones of different types through a sequence of upgrade steps. Each step incrementally transforms the program state, ultimately producing the desired structure and values.

A cleaner, more maintainable solution is to declare an explicit migration expression that is used to transform a subset of existing stable variables into a subset of replacement stable variables.

Both of these data migration paths are supported by static and dynamic checks that prevent data loss or corruption. A user may still lose data due to coding errors, so should tread carefully.
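An explicit migration expression might look roughly like the sketch below. The Counter actor, the old field count, and the new field total are hypothetical. The (with migration = ...) form follows the syntax described in the Motoko documentation as best understood here, so treat the details as an assumption and check them against your compiler version.

```motoko
import Float "mo:core/Float";

// Assume the previously deployed version declared:  var count : Int = 0;
(with migration =
  // Consumes the old stable field and produces its replacement.
  func (old : { var count : Int }) : { var total : Float } {
    { var total = Float.fromInt(old.count) }
  }
)
persistent actor Counter {
  // On upgrade, total takes the value produced by the migration function.
  // The initializer below applies to a fresh install only.
  var total : Float = 0.0;

  public func add(x : Float) : async Float {
    total += x;
    total
  };
}
```

Keeping the transformation in a single function makes the data change reviewable in one place, rather than spread over a sequence of intermediate upgrades.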

Legacy Features

Using the pre- and post-upgrade system methods is discouraged. It is error-prone and can render a canister unusable. In particular, if a preupgrade method traps and cannot be prevented from trapping by other means, then your canister may no longer be upgraded and is stuck with the existing version.

Per best practices, using these methods should be avoided if possible.

Motoko supports user-defined upgrade hooks that run immediately before and after an upgrade. These upgrade hooks allow triggering additional logic on upgrade. They are declared as system functions with special names, preupgrade and postupgrade. Both functions must have type () -> ().

If preupgrade raises a trap, hits the instruction limit, or hits another IC computing limit, the upgrade can no longer succeed and the canister is stuck with the existing version.

postupgrade is not needed, as the same effect can be achieved by introducing initializing expressions in the actor, e.g. non-stable let expressions or expression statements.
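A minimal sketch of that alternative, assuming the mo:core library and hypothetical field names: the transient declaration and the expression statement are both evaluated whenever the actor is installed or upgraded, which is the point at which a postupgrade hook would otherwise run.

```motoko
import Time "mo:core/Time";

persistent actor {
  // Stable: keeps its value across upgrades.
  var startCount : Nat = 0;

  // Transient: the initializer is re-evaluated on install and on every upgrade.
  transient let startedAt : Time.Time = Time.now();

  // Expression statement: runs during initialization, after the declarations above.
  startCount += 1;

  public query func info() : async (Nat, Time.Time) {
    (startCount, startedAt)
  };
}
```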

CanDB Integration

For applications requiring advanced data management, CanDB provides a database layer on top of canister state, with horizontal scaling across canisters. The following is simplified pseudocode: the call shapes (db.insert, db.get, db.update) are illustrative placeholders, so check the CanDB documentation for the actual API.

    import CanDB "mo:candb/CanDB";
    import Entity "mo:candb/Entity";

    actor UserDB {
      // CanDB manages persistence automatically
      let db = CanDB.init();

      public func createUser(userData: User) : async Text {
        // Data persists without explicit operations
        await db.insert(userData);
      };

      public func getUser(userId: Text) : async ?User {
        // Always returns current persisted data
        await db.get(userId);
      };

      public func updateUser(userId: Text, updates: UserUpdates) : async () {
        // Updates persist automatically
        await db.update(userId, updates);
      };
    };

Benefits of Orthogonal Persistence

Simplified Development

- No ORM complexity: Direct object manipulation

- No serialization layers: Data persists as native objects

- Reduced boilerplate: Focus on business logic, not persistence

Enhanced Reliability

- Atomic operations: Changes persist or fail completely

- Automatic consistency: No manual transaction management

- Crash recovery: Data integrity maintained across failures

Better Performance

- Reduced I/O: No explicit disk/network operations

- Memory efficiency: Objects loaded on-demand

- Optimized storage: The platform handles physical storage automatically

Developer Productivity

- Faster development: Less code to write and maintain

- Fewer bugs: Automatic persistence eliminates common errors

- Easier testing: No mock databases or persistence layers needed

Orthogonal Persistence vs Traditional Approaches

| Aspect | Traditional Persistence | Orthogonal Persistence |
|---|---|---|
| Code Complexity | High (ORMs, DAOs) | Low (direct object use) |
| Data Consistency | Manual (transactions) | Automatic (atomic) |
| Error Handling | Complex (rollback logic) | Simple (a trap rolls back the message's changes) |
| Performance | Variable (optimization needed) | Optimized (system managed) |
| Scalability | Limited (manual sharding) | Bounded by canister memory (scaling across canisters is explicit) |
| Maintenance | High (schema migrations) | Low (implicit migration for compatible changes) |

Real-World Applications of Orthogonal Persistence

Social Media Platforms

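    import Array "mo:core/Array";
    import Map "mo:core/Map";
    import Nat "mo:core/Nat";
    import Text "mo:core/Text";
    import Time "mo:core/Time";

    persistent actor SocialPlatform {
      type PostId = Text;
      type UserId = Text;
      type Post = { id : PostId; content : Text; timestamp : Time.Time };

      // User posts persist automatically
      let posts = Map.empty<PostId, Post>();
      // User relationships persist automatically
      let follows = Map.empty<UserId, [UserId]>();
      // Counter used to derive post ids
      var nextPostId : Nat = 0;

      public func createPost(content : Text) : async PostId {
        let postId = "post-" # Nat.toText(nextPostId);
        nextPostId += 1;
        let post = { id = postId; content = content; timestamp = Time.now() };
        Map.add(posts, Text.compare, postId, post); // kept once the message completes
        postId
      };

      public func followUser(followerId : UserId, targetId : UserId) : async () {
        let currentFollowing = switch (Map.get(follows, Text.compare, followerId)) {
          case (?list) list;
          case null [];
        };
        Map.add(follows, Text.compare, followerId, Array.concat(currentFollowing, [targetId]));
      };
    };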

E-commerce Applications

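    import Array "mo:core/Array";
    import Map "mo:core/Map";
    import Text "mo:core/Text";

    persistent actor EcommerceStore {
      type UserId = Text;
      type ProductId = Text;
      type Product = { name : Text; priceCents : Nat }; // placeholder fields
      type CartItem = { productId : ProductId; quantity : Nat };
      type ShoppingCart = { items : [CartItem] };

      // Product catalog persists automatically
      let products = Map.empty<ProductId, Product>();
      // Shopping carts persist automatically
      let carts = Map.empty<UserId, ShoppingCart>();

      public func addToCart(userId : UserId, productId : ProductId, quantity : Nat) : async () {
        let cart : ShoppingCart = switch (Map.get(carts, Text.compare, userId)) {
          case (?existing) existing;
          case null ({ items = [] });
        };
        // Append the item and store the updated cart; it persists automatically
        let newItem = { productId = productId; quantity = quantity };
        let updatedCart = { items = Array.concat(cart.items, [newItem]) };
        Map.add(carts, Text.compare, userId, updatedCart);
      };
    };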

Gaming Applications

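    import Map "mo:core/Map";
    import Text "mo:core/Text";

    persistent actor GameState {
      type PlayerId = Text;
      type Position = { x : Int; y : Int };                      // placeholder fields
      type PlayerState = { position : Position; score : Nat };   // placeholder fields
      type WorldState = { level : Nat; activePlayers : Nat; events : [Text] };

      // Player progress persists automatically
      let playerStates = Map.empty<PlayerId, PlayerState>();
      // Game world state persists automatically
      var worldState : WorldState = { level = 1; activePlayers = 0; events = [] };

      public func updatePlayerPosition(playerId : PlayerId, position : Position) : async () {
        switch (Map.get(playerStates, Text.compare, playerId)) {
          case (?state) {
            let updatedState = { state with position = position };
            Map.add(playerStates, Text.compare, playerId, updatedState);
          };
          case null { /* handle new player */ };
        };
      };
    };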

Implementing Orthogonal Persistence

Configuration in ICP

Enabling Enhanced Orthogonal Persistence

EOP is enabled by default in Motoko since version 0.15.0. To set it explicitly, for example when upgrading from classical persistence, pass the flag through the args field of the canister's entry in dfx.json:

    {
      "canisters": {
        "my-canister": {
          "main": "main.mo",
          "type": "motoko",
          "args": "--enhanced-orthogonal-persistence"
        }
      }
    }

Compiler Flags

- --enhanced-orthogonal-persistence: Explicitly enable EOP (usually unnecessary)

- --legacy-persistence: Revert to classical persistence (deprecated)

- --disable-persistence-safeguard: Allow upgrades from classical to enhanced

Design Principles

Persistence Transparency

- Application code should be unaware of persistence mechanics

- Data access should feel like regular memory operations

- Persistence should be automatic and invisible to developers

Type Safety

- Persistent data should maintain type information

- No runtime type casting or conversion needed

- Compile-time guarantees for data integrity

Concurrency Control

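- Automatic handling of concurrent access

- On ICP, each canister executes one message at a time, so no locks are needed around state access

- Transaction-like semantics without explicit transactions: if a message traps, the state changes it made since its last await are rolled back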

Schema Evolution

- Automatic adaptation to data structure changes

- Backward compatibility for existing data

- Migration support for complex changes

Best Practices

Data Modeling

    // Use stable types for long-term persistence
    type User = {
      id : Text;
      username : Text;
      email : Text;
      profile : Profile;
      created : Time.Time;
      lastLogin : ?Time.Time;
    };

    // Prefer immutable updates
    // (assumes users is a mo:core Map<Text, User> declared in the enclosing persistent actor)
    public func updateUser(userId : Text, updates : UserUpdates) : async () {
      switch (Map.get(users, Text.compare, userId)) {
        case (?user) {
          let updatedUser = {
            user with
            email = switch (updates.email) { case (?e) e; case null user.email };
            lastLogin = ?Time.now();
          };
          Map.add(users, Text.compare, userId, updatedUser);
        };
        case null { /* error */ };
      };
    };

Performance Optimization

    // Use appropriate data structures for access patterns
    import Map "mo:core/Map";

    persistent actor OptimizedStore {
      type ItemId = Text;
      type Category = Text;
      type Item = { name : Text; category : Category }; // placeholder fields

      // Fast lookup by ID
      let byId = Map.empty<ItemId, Item>();
      // Fast lookup by category
      let byCategory = Map.empty<Category, [ItemId]>();
      // Index for search operations (search term -> matching items)
      let searchIndex = Map.empty<Text, [ItemId]>();
    };

Upgrade Strategies

Safe Upgrades with EOP

- Type compatibility: Ensure new types are compatible with existing data

- Testing: Thoroughly test upgrades in development environment

- Backup recovery: Have data recovery mechanisms ready

- Gradual rollout: Test upgrades on staging before production

Migration Patterns

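    // Custom migration for complex changes, written as an initialization
    // expression instead of the discouraged preupgrade/postupgrade hooks
    import Map "mo:core/Map";
    import Text "mo:core/Text";

    persistent actor MigratingCanister {
      type UserV1 = { id : Text; name : Text };                 // placeholder fields
      type UserV2 = { id : Text; name : Text; email : ?Text };  // placeholder fields

      var usersV1 : ?[UserV1] = null;          // Old format, retained from the previous version
      let usersV2 = Map.empty<Text, UserV2>(); // New format

      func migrateUserV1ToV2(user : UserV1) : UserV2 {
        { id = user.id; name = user.name; email = null }
      };

      // Runs when the actor is installed or upgraded; stable variables keep their retained values
      switch (usersV1) {
        case (?oldUsers) {
          for (user in oldUsers.values()) {
            Map.add(usersV2, Text.compare, user.id, migrateUserV1ToV2(user));
          };
          usersV1 := null; // Clean up old data
        };
        case null { /* already migrated */ };
      };
    };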

Challenges and Solutions

Memory Limits

Challenge: Canister memory is limited

Solution: Use CanDB for horizontal scaling across multiple canisters

Upgrade Safety

Challenge: Code upgrades must preserve data integrity

Solution: Keep state in stable declarations, rely on EOP's runtime compatibility check, and test upgrades before deploying

Query Complexity

Challenge: Complex queries require indexing

Solution: Implement custom indexes alongside primary data structures

The Future of Orthogonal Persistence

As ICP and similar technologies mature, orthogonal persistence will become the standard for application development:

Integration with Traditional Systems

- Bridges to existing databases

- Hybrid persistence models

- Migration tools for legacy applications

Advanced Features

- Time travel: Access historical data states

- Multi-version concurrency: Advanced conflict resolution

- Distributed persistence: Cross-canister data consistency

Developer Tools

- Visual persistence explorers: Debug persistent data

- Automatic optimization: Performance tuning tools

- Migration assistants: Schema evolution helpers

Conclusion

Orthogonal persistence represents a fundamental shift in how we think about data management in applications. By making persistence transparent and automatic, developers can focus on building features rather than managing data storage.

ICP canisters bring orthogonal persistence to blockchain applications, enabling:

- Simpler code: No explicit persistence logic

- Better reliability: Automatic consistency and atomicity

- Higher performance: System-optimized storage and retrieval

- Greater productivity: Focus on business logic, not infrastructure

As more developers experience the benefits of orthogonal persistence on ICP, it will become the preferred way to build robust, scalable applications.

Ready to experience persistence without the complexity? Start building with ICP canisters today.

This guide explores how orthogonal persistence revolutionizes application development. In our next post, we'll dive deeper into practical patterns for building orthogonally persistent applications on ICP.